I recently watched the movie Mercy, which has sparked heated discussion on Chinese social media. I was curious to see how Hollywood would portray the future of AI.

Side note: I noticed that MGM is now Amazon MGM Studios. I may have read the news before, but it is still surprising to see how far Amazon has expanded into film, to the point of owning one of Hollywood’s classic major studios.

The Premise

The basic idea of the movie is this: an AI takes over the role of deciding whether people are guilty, and the main character has 90 minutes to prove the AI wrong. I won’t dwell on the plot’s logical flaws. Instead, I’d rather discuss which parts of the movie’s vision seem actually achievable.

Tech Elements That Caught My Eye

1. Voice Dictation

In the movie, the character interacts with Mercy mainly by voice, although a touch screen is available as well. This is already possible today. Some technical difficulties remain, though. As one scene shows, the system has to decide whether the speaker wants to send a message or delete it. The user’s intent is hard to infer from the speech-to-text transcript alone.

Still, I firmly believe text will remain the main modality for interaction, with voice and other modalities acting as wrappers around it.

2. Long Context Memory

Mercy seems to have unlimited memory, at least within that 90-minute session. I wonder how it manages its memory state:

- Is it just because of a huge context window?

- Or are there more advanced algorithms to manage memory state?

There are numerous videos, call recordings, and other pieces of evidence to process, so this is an interesting question.
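The movie never explains how Mercy handles this, but the second option can be illustrated. Below is a minimal sketch of one common idea, assuming a fixed token budget: keep recent evidence verbatim and fold older items into compressed summaries once the budget is exceeded. `MemoryManager` and `summarize` are hypothetical names; `summarize` simply truncates as a stand-in for a real model-generated summary, and tokens are approximated by whitespace-separated words.

```python
from dataclasses import dataclass, field


def count_tokens(text: str) -> int:
    """Rough token estimate: whitespace-separated words (an assumption, not a real tokenizer)."""
    return len(text.split())


def summarize(text: str, max_words: int = 5) -> str:
    """Hypothetical stand-in for a model-generated summary: keep only the first few words."""
    words = text.split()
    if len(words) <= max_words:
        return text
    return " ".join(words[:max_words]) + " ..."


@dataclass
class MemoryManager:
    budget: int  # max tokens allowed for items kept verbatim
    recent: list[str] = field(default_factory=list)
    summaries: list[str] = field(default_factory=list)

    def used(self) -> int:
        return sum(count_tokens(item) for item in self.recent)

    def add(self, item: str) -> None:
        self.recent.append(item)
        # Move the oldest verbatim items into compressed summaries until back within budget.
        # A single item larger than the budget is kept rather than dropped.
        while self.used() > self.budget and len(self.recent) > 1:
            oldest = self.recent.pop(0)
            self.summaries.append(summarize(oldest))

    def context(self) -> str:
        """Build the prompt context: compressed history first, then recent items verbatim."""
        return "\n".join(self.summaries + self.recent)


if __name__ == "__main__":
    mem = MemoryManager(budget=12)
    mem.add("Call recording: suspect phoned the victim at 9pm from downtown")
    mem.add("Video: doorbell camera shows a figure at 9:40pm")
    mem.add("Witness statement: neighbor heard shouting")
    print(mem.context())
```

The trade-off is detail for room: the newest evidence stays complete, while older evidence survives only in compressed form.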

3. Async Tool Calling

We already see early versions of this today, when voice assistants keep talking with users while running tasks in the background. The idea isn’t new, but the movie’s polished UI makes it look great.

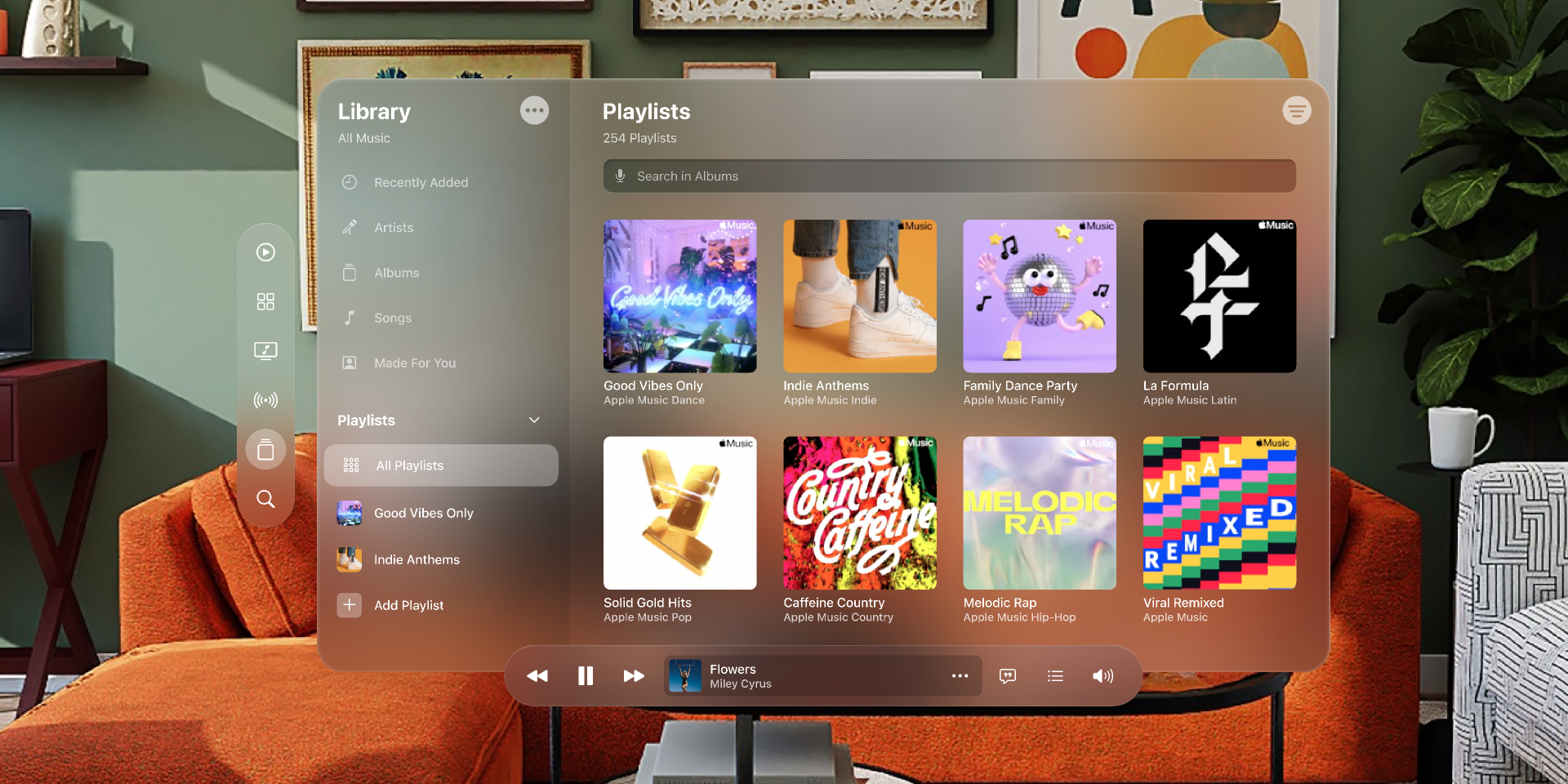
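The core pattern behind background tool calls can be sketched with Python’s `asyncio`. This is a minimal illustration under stated assumptions, not how the movie’s system or any real assistant is built: `search_records` is a hypothetical slow tool, and its `sleep` only simulates latency. The assistant starts the tool as a background task, keeps handling the conversation, and collects the result once it is ready.

```python
import asyncio


async def search_records(query: str, delay: float) -> str:
    """Hypothetical slow tool (e.g. a records lookup); sleep simulates latency."""
    await asyncio.sleep(delay)
    return f"results for {query!r}"


async def assistant(delay: float = 0.05) -> list[str]:
    transcript: list[str] = []
    # Start the tool call in the background instead of awaiting it immediately.
    task = asyncio.create_task(search_records("phone logs", delay))
    transcript.append("assistant: Searching phone logs in the background.")

    # Keep handling the conversation while the tool runs.
    for question in ["Who called at 9pm?", "Pull up the doorbell video."]:
        transcript.append(f"user: {question}")
        transcript.append("assistant: Noted, I'll check that once the search finishes.")
        await asyncio.sleep(0)  # yield control so the background task can make progress

    # Collect the tool result when it is ready.
    result = await task
    transcript.append(f"tool: {result}")
    return transcript


if __name__ == "__main__":
    for line in asyncio.run(assistant()):
        print(line)
```

The user’s turns are handled before the tool result arrives, which is the non-blocking behavior the movie’s UI dramatizes.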

4. Spatial Computing

The movie’s UI looks like Apple’s Vision Pro, lol. But it goes further, surrounding the character with an immersive environment, almost like a 4D experience. I’m looking forward to this, though I doubt it will be achieved by 2029.

Final Thoughts

We seem to have most of the pieces needed for the movie to become reality, yet we lack a coherent agentic system, or a company, that brings them all together. Could transformers get us there? Maybe, but I hope new architectures emerge as well, because next-token prediction really does not work the way human brain cells do.