Info

- Title: Talk With Human-like Agents: Empathetic Dialogue Through Perceptible Acoustic Reception and Reaction

- Group: USTC

- Keywords: Speech as feedback

- Venue: ACL 2024

Comments

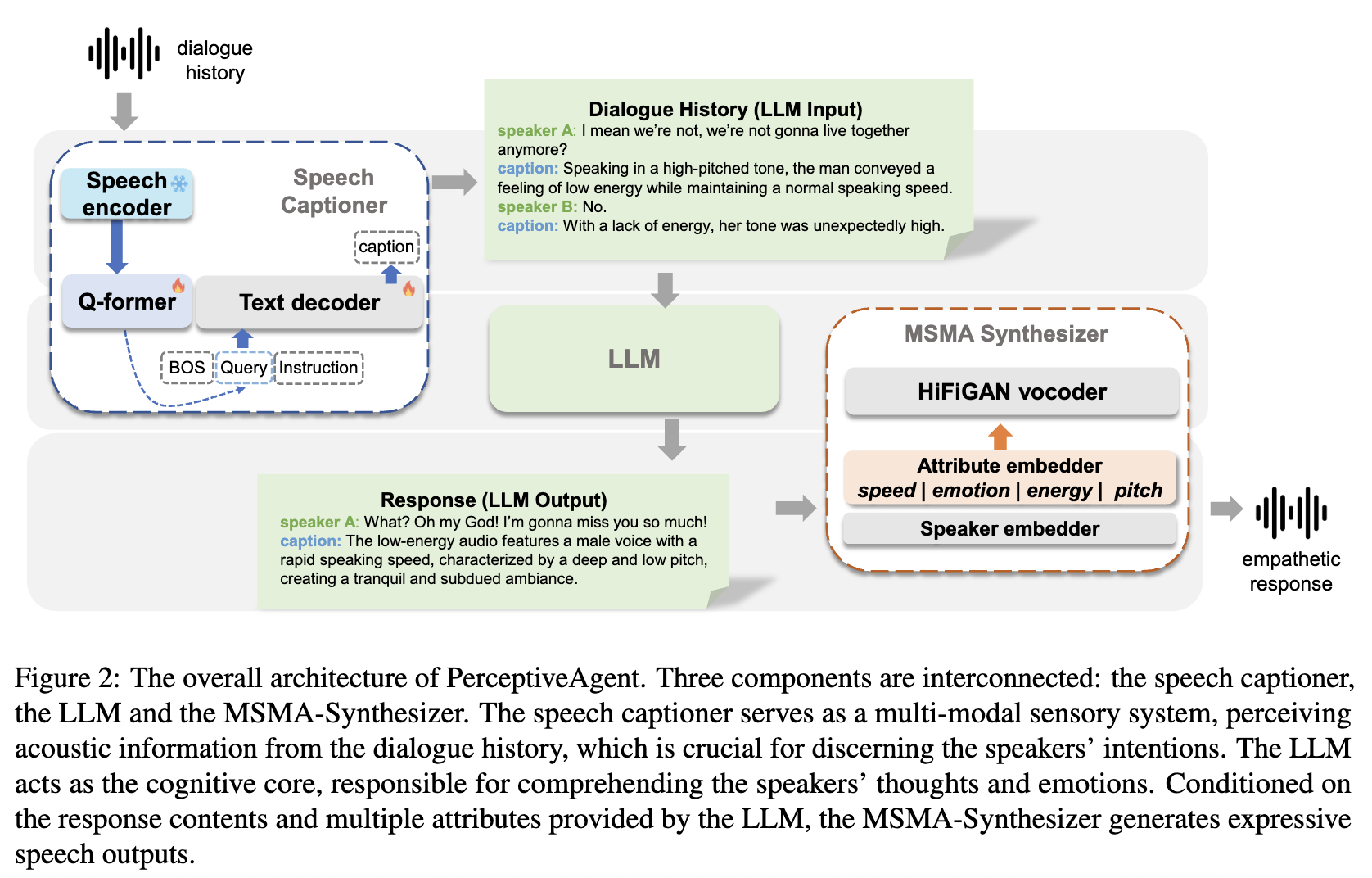

- They use a pre-trained Q-former and a GPT-2 decoder to “caption” the speech first.

- They use the TextrolSpeech dataset, which consists of 236,220 pairs of captions and corresponding speech samples.

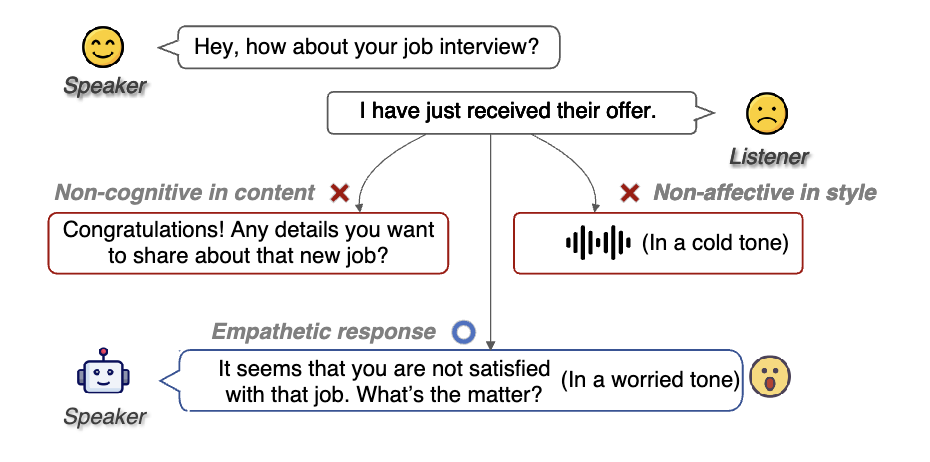

- PerceptiveAgent is evaluated on two kinds of empathy: cognitive empathy, measured by BERTScore on the quality of the generated text, and affective empathy, measured by the accuracy of an expressive style classifier on the generated audio. BERTScore is computed on MELD.
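
To make the two metrics concrete, here is a minimal sketch of how they could be computed. It is not the paper's evaluation code. `greedy_f1` follows the greedy-matching definition of BERTScore but works on small hand-written vectors standing in for BERT token embeddings, and `style_accuracy` assumes the style classifier's predictions are already available as labels. All function names, vectors, and labels are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def greedy_f1(cand_vecs, ref_vecs):
    """BERTScore-style F1: each token is matched to its most similar
    token on the other side, then precision and recall are averaged."""
    precision = sum(max(cosine(c, r) for r in ref_vecs) for c in cand_vecs) / len(cand_vecs)
    recall = sum(max(cosine(r, c) for c in cand_vecs) for r in ref_vecs) / len(ref_vecs)
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


def style_accuracy(predicted, expected):
    """Fraction of utterances whose predicted style matches the target style."""
    matches = sum(p == e for p, e in zip(predicted, expected))
    return matches / len(expected)


# Toy data (assumed): 2-d vectors in place of real BERT embeddings.
candidate = [[1.0, 0.0]]
reference = [[1.0, 0.0], [0.0, 1.0]]
text_score = greedy_f1(candidate, reference)

# Toy data (assumed): style labels from a hypothetical classifier.
audio_score = style_accuracy(
    ["happy", "sad", "angry", "happy"],
    ["happy", "sad", "happy", "happy"],
)
```

In a real evaluation the embeddings would come from a pre-trained BERT model and the scores would be averaged over the test set.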