Info

- Title: ConceptAttention: Diffusion Transformers Learn Highly Interpretable Features

- Group: Gatech

- Keywords: visualization, attention

- Venue: ICML 2025

Comments

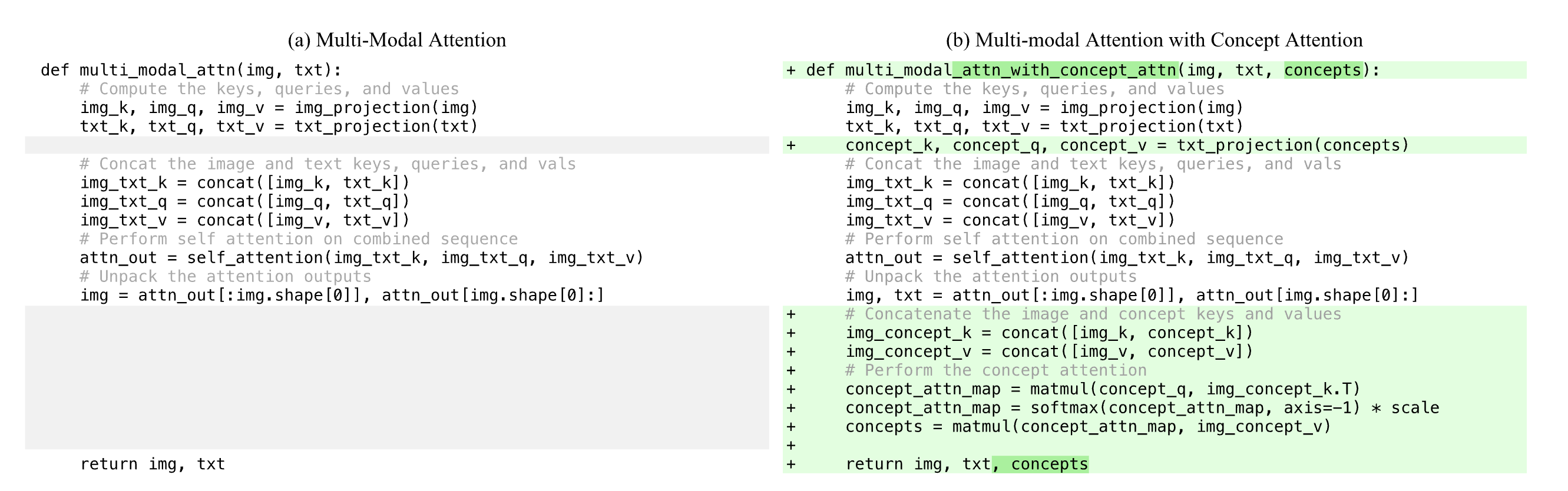

ConceptAttention repurposes the attention layers of a diffusion transformer (DiT) to generate saliency maps. Concept embeddings are processed through a separate attention mechanism that interacts with the image features.
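
As a rough illustration of that idea, here is a minimal single-head NumPy sketch. It is not the authors' implementation: the function name `concept_saliency`, the random weights, the tensor sizes, the omission of prompt tokens, and the choice to score each patch by a dot product between image and concept attention outputs are all assumptions made for this example. The point it tries to show is that concept tokens reuse the layer's projections and read from the image tokens, while the image path never attends to the concepts.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def concept_saliency(image_feats, concept_embs, w_q, w_k, w_v):
    """Hypothetical helper: per-patch distribution over concepts.

    image_feats:  (n_patches, d_model) image token features
    concept_embs: (n_concepts, d_model) concept embeddings
    w_q, w_k, w_v: (d_model, d_head) projections shared by both token types
    """
    d_head = w_q.shape[1]

    # Project image and concept tokens with the same (reused) weights.
    q_i, k_i, v_i = image_feats @ w_q, image_feats @ w_k, image_feats @ w_v
    q_c, k_c, v_c = concept_embs @ w_q, concept_embs @ w_k, concept_embs @ w_v

    # Image path: attends to image tokens only, so concepts cannot alter it.
    img_out = softmax(q_i @ k_i.T / np.sqrt(d_head)) @ v_i

    # Concept path (assumed form): concept queries attend to image and concept tokens.
    k_all = np.concatenate([k_i, k_c], axis=0)
    v_all = np.concatenate([v_i, v_c], axis=0)
    con_out = softmax(q_c @ k_all.T / np.sqrt(d_head)) @ v_all

    # Similarity between each patch output and each concept output,
    # normalised across concepts.
    return softmax(img_out @ con_out.T, axis=-1)


# Toy usage: a 4x4 patch grid, 3 concepts, random features and weights.
rng = np.random.default_rng(0)
d_model, d_head = 8, 8
image_feats = rng.normal(size=(16, d_model))
concept_embs = rng.normal(size=(3, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_head)) for _ in range(3))

saliency = concept_saliency(image_feats, concept_embs, w_q, w_k, w_v)
maps = saliency.T.reshape(3, 4, 4)  # one 4x4 saliency map per concept
```

Each slice `maps[c]` can be upsampled and overlaid on the image as a heat map for concept `c`. In a real DiT the features and weights would come from the pretrained model's attention layers rather than from a random generator.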