Info

- Title: MAS-GPT: Training LLMs to Build LLM-based Multi-Agent Systems

- Group: Shanghai Jiao Tong University, Shanghai AI Lab

- Keywords: agent, multi-agent system, code generation

- Venue: Submitted to ICML 2025

Challenge

The authors tackle a fundamental challenge in large language model (LLM) applications: how to build effective multi-agent systems (MAS) that adapt to diverse tasks without heavy human effort or high computational cost.

The key challenges identified include:

Inadaptability of existing MAS: Current approaches such as MetaGPT, ChatDev, and AgentVerse use manually crafted, static agent configurations that lack the flexibility to adapt to different tasks.

High computational costs: Adaptive MAS approaches (like GPTSwarm and DyLAN) require multiple LLM calls for each new task, making them expensive and time-consuming.

LLM knowledge gap: LLMs have limited knowledge of MAS generation, and there is little training data for teaching them to build multi-agent systems.

Representation challenge: A unified way to represent executable MAS is needed to facilitate training and generation.

Method

The authors propose MAS-GPT, a novel approach that reframes the process of building a multi-agent system as a generative language task. Their methodology includes:

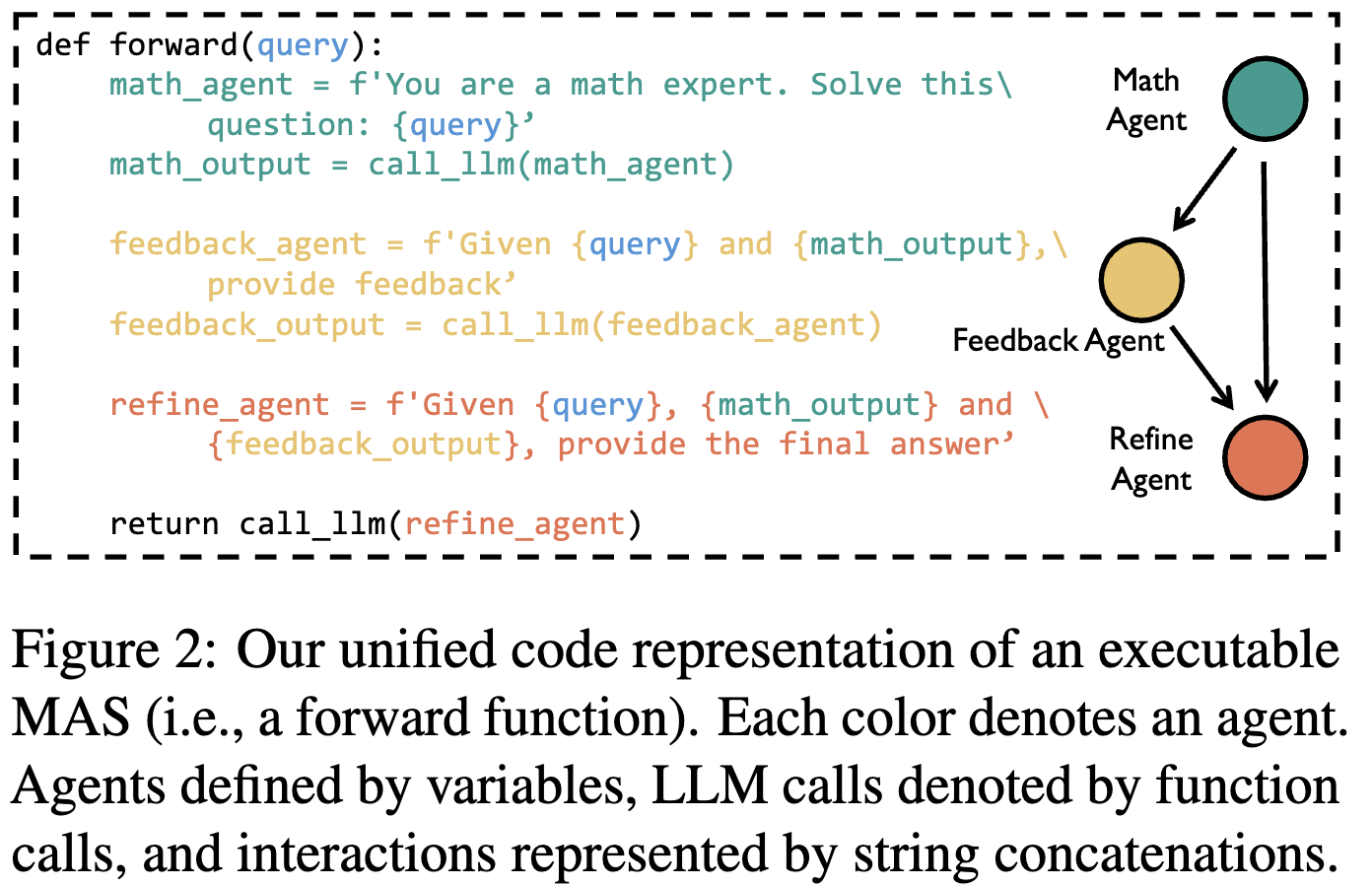

MAS as executable code: They unify the representation of MAS as Python code (a forward function), where:

- Agent prompts are defined as variables

- LLM calls are represented as functions

- Agent interactions are implemented through string concatenation
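A minimal sketch of what such a forward function might look like, assuming a hypothetical `call_llm(prompt) -> str` interface and a simple solve-then-judge design. The function name `forward`, its signature, the prompts, and the `fake_llm` stand-in are illustrative, not the paper's exact template.

```python
from typing import Callable, List

# Hypothetical LLM interface: prompt string in, completion string out.
LLMFn = Callable[[str], str]


def forward(query: str, call_llm: LLMFn, n_solvers: int = 3) -> str:
    """A toy MAS: several solver agents answer independently, then a judge agent picks one."""
    # Agent prompts are plain string variables.
    solver_prompt = "You are a careful problem solver. Solve the task step by step.\n\nTask: "
    judge_prompt = "You are a judge. Given candidate solutions, output the single best final answer.\n\n"

    # Each agent turn is one LLM call; the solvers all run on the same query.
    candidates: List[str] = [call_llm(solver_prompt + query) for _ in range(n_solvers)]

    # Agent interaction is string concatenation: solver outputs become part of the judge's prompt.
    judge_input = judge_prompt + "Task: " + query + "\n\n"
    for i, cand in enumerate(candidates, start=1):
        judge_input += f"Solution {i}:\n{cand}\n\n"
    return call_llm(judge_input)


def fake_llm(prompt: str) -> str:
    # Offline stand-in for a real model: reports which role it was prompted as.
    return "JUDGE" if prompt.startswith("You are a judge") else "SOLVED"


print(forward("What is 2 + 3?", fake_llm))  # → JUDGE
```

Because the whole system is ordinary code, swapping the design (more solvers, a debate loop, a reviewer agent) only means generating a different function body.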

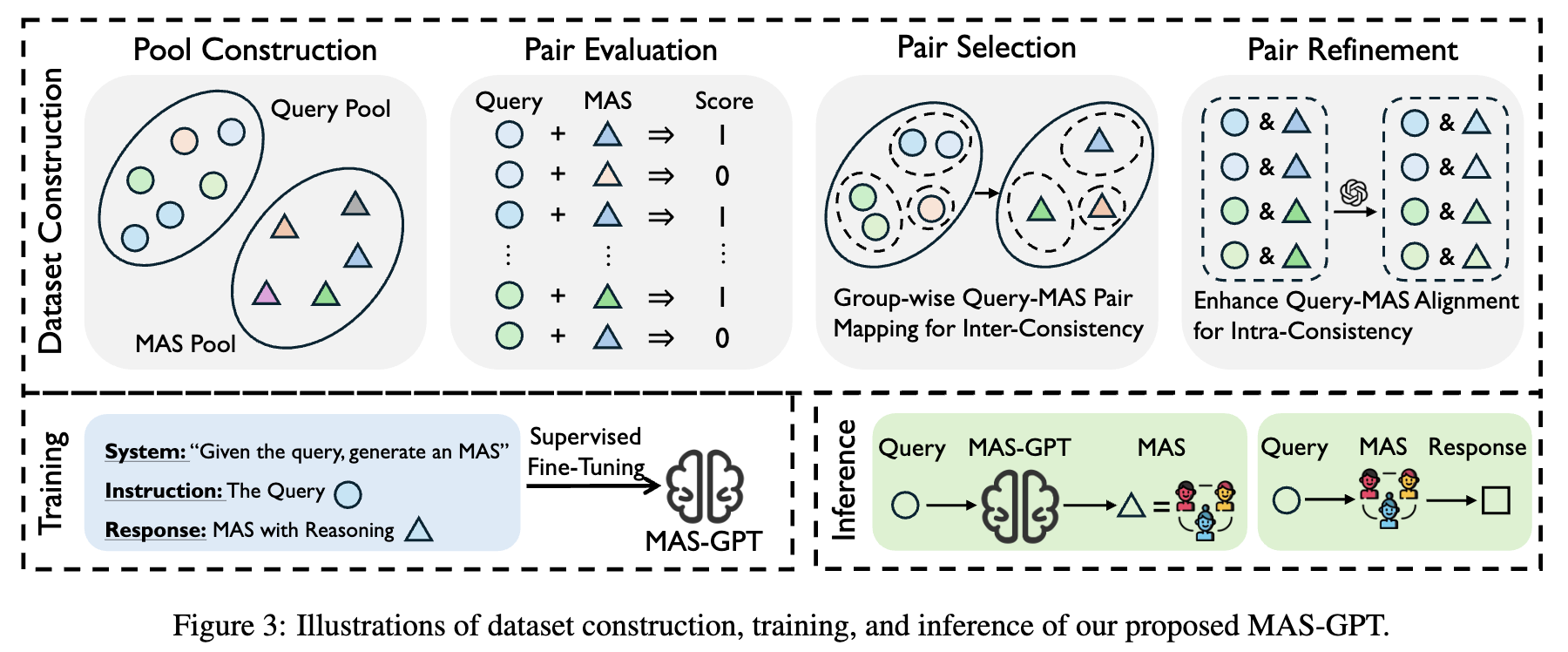

Consistency-oriented data construction pipeline:

- Construction: Build a query pool from public datasets and a MAS pool of over 40 unique MAS designs

- Evaluation: Pair queries with MAS and evaluate their effectiveness

- Inter-consistency selection: Group similar queries and assign them the same high-performing MAS to maintain consistency

- Intra-consistency refinement: Use advanced LLMs to adjust MAS to be more query-dependent and introduce reasoning processes to explain connections between queries and MAS
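The inter-consistency selection step can be sketched as follows. This is a simplified illustration, not the paper's exact rule: it assumes query groups are already computed (for example by clustering query embeddings), that every MAS in a group was scored on every query in that group, and it picks, per group, the MAS with the highest mean score. The function name and the MAS labels `cot` and `debate` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def inter_consistency_select(
    groups: Dict[str, str],               # query_id -> group label (assumed precomputed)
    scores: Dict[str, Dict[str, float]],  # query_id -> {mas_id: evaluation score}
) -> Dict[str, str]:
    """Assign every query in a group the MAS with the highest mean score over that group."""
    members: Dict[str, List[str]] = defaultdict(list)
    for qid, label in groups.items():
        members[label].append(qid)

    assignment: Dict[str, str] = {}
    for qids in members.values():
        # Only consider MAS that were evaluated on every query in the group.
        common = set.intersection(*(set(scores[q]) for q in qids))
        # sorted() makes tie-breaking deterministic (alphabetical MAS id wins).
        best = max(sorted(common), key=lambda m: sum(scores[q][m] for q in qids) / len(qids))
        for q in qids:
            assignment[q] = best
    return assignment


groups = {"q1": "algebra", "q2": "algebra", "q3": "code"}
scores = {
    "q1": {"cot": 1.0, "debate": 0.0},
    "q2": {"cot": 0.5, "debate": 1.0},
    "q3": {"cot": 0.0, "debate": 1.0},
}
result = inter_consistency_select(groups, scores)
print(result)  # → {'q1': 'cot', 'q2': 'cot', 'q3': 'debate'}
```

Note that `q2` alone would prefer `debate`, but it is assigned `cot` because that MAS is best for its group on average; this is the kind of consistency across similar queries the step aims for.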

Supervised fine-tuning: Train MAS-GPT (based on Qwen2.5-Coder-32B-Instruct) using the constructed dataset, where:

- System prompt: Describes MAS generation task

- Instruction: User query

- Response: Reasoning process + executable MAS code
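One way to store a training sample in this system/instruction/response layout is a chat-style `messages` record, a common input format for SFT tooling. The system prompt text, the helper `build_sft_sample`, and the example query are assumptions for illustration; the paper's exact prompt and response delimiters are not reproduced here.

```python
import json
from typing import Dict, List

# Hypothetical system prompt; the paper's actual wording is not reproduced here.
SYSTEM_PROMPT = (
    "Given a user query, design a multi-agent system for it. "
    "First explain your reasoning, then output the system as a Python function named forward."
)


def build_sft_sample(query: str, reasoning: str, mas_code: str) -> Dict[str, List[Dict[str, str]]]:
    """Pack one (query, reasoning, MAS code) triple into a chat-style SFT record."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},  # describes the MAS generation task
            {"role": "user", "content": query},            # instruction = the user query
            # Response the model is trained to produce: reasoning first, then executable code.
            {"role": "assistant", "content": reasoning.strip() + "\n\n" + mas_code.strip()},
        ]
    }


sample = build_sft_sample(
    query="How many positive divisors does 36 have?",
    reasoning="A short counting problem: a few independent solvers plus a vote should suffice.",
    mas_code="def forward(query, call_llm):\n    ...",
)
line = json.dumps(sample)  # one JSONL line of the training set
print(line)
```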

Single-inference MAS generation: During inference, MAS-GPT generates an appropriate MAS for any given query in a single LLM call, which can then be immediately executed to produce a response.
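A minimal sketch of the execution step, assuming the generated text ends with a `forward(query, call_llm)` definition (the signature, the helper `run_generated_mas`, and the sample output are hypothetical) and using a stand-in driver LLM so the example runs offline:

```python
from typing import Callable

# Hypothetical LLM interface: prompt string in, completion string out.
LLMFn = Callable[[str], str]


def run_generated_mas(generated: str, query: str, call_llm: LLMFn) -> str:
    """Extract the forward() definition from generated text and run it on the query."""
    start = generated.find("def forward")
    if start == -1:
        raise ValueError("no forward() definition found in generated text")
    code = generated[start:]  # assumes the code is the last part of the output
    namespace: dict = {}
    # WARNING: exec runs model-written code; sandbox it outside of toy demos.
    exec(code, namespace)
    return namespace["forward"](query, call_llm)


# Stand-in for one generated output: reasoning text followed by MAS code.
generated = (
    "Reasoning: a single solver is enough for this simple query.\n\n"
    "def forward(query, call_llm):\n"
    "    return call_llm('Answer concisely: ' + query)\n"
)


def fake_llm(prompt: str) -> str:
    # Offline stand-in for the driver LLM.
    return prompt.upper()


print(run_generated_mas(generated, "what is 2+3?", fake_llm))  # → ANSWER CONCISELY: WHAT IS 2+3?
```

"Single inference" refers to generating the MAS; running it still costs whatever driver-LLM calls the generated `forward` makes.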

Results

The authors evaluated MAS-GPT extensively. Key results:

Generality across diverse tasks: Outperformed 10+ baseline methods across 8 benchmarks when using Llama-3-70B-Instruct as the MAS driver, with a 3.89% improvement over the second-best method.

Strong performance with different LLM backbones: Maintained superior performance regardless of which LLM (Qwen2.5-72B-Instruct, GPT-4o-mini) was used to drive the MAS.

Enhanced reasoning capabilities: Improved the performance of advanced reasoning LLMs such as o1-preview and DeepSeek-R1 on the challenging AIME-2024 mathematical benchmark by 13.34% and 10.0%, respectively.

Outperforming specialized systems: Outperformed AFlow, a MAS optimizer tuned specifically for math, on its own target domain (the MATH dataset) by 3.53%.

Efficiency: Achieved the best performance with the lowest inference cost compared to methods like AgentVerse, DyLAN, and GPTSwarm.

Ablation studies showed:

- Inter-consistency selection improved MATH performance by 8.39%

- Both MAS adjustment and reasoning process introduction contributed to performance gains

- Performance improves with more training data and larger model sizes

Insights

This paper presents several important insights for the future of LLM-based agent systems:

Reframing the problem: By treating MAS creation as a generative language task, the authors turn a complex engineering problem into a more tractable machine learning problem.

Data-driven MAS creation: The approach demonstrates that LLMs can learn to generate effective multi-agent systems from examples, reducing the need for manual configuration.

Balance between adaptability and efficiency: MAS-GPT achieves adaptability (customized MAS for each query) while maintaining efficiency (single inference vs. multiple calls).

Code as universal representation: Using code as the representation medium for MAS provides a standardized, executable format that bridges the gap between description and implementation.

Promising scaling trends: The consistent improvement with more data and larger models suggests that MAS-GPT’s approach is likely to keep benefiting from general advances in the field.

Enhancing state-of-the-art models: The ability to further enhance the capabilities of already powerful reasoning LLMs indicates that MAS-GPT’s approach is complementary to existing advances in LLM capabilities.

Democratization potential: By reducing both the expertise required and computational cost for creating custom multi-agent systems, this approach could significantly broaden the application of MAS technology.

Comments

This is an interesting work that fine-tunes Qwen2.5-Coder-32B-Instruct to generate a complete multi-agent system, expressed as code, in a single inference.

The MAS-GPT dataset construction process first collects diverse queries from public datasets and builds a pool of 40+ multi-agent systems represented as Python code. Each query-MAS pair is then evaluated automatically, and similar queries are grouped and assigned the best-performing MAS to ensure inter-consistency. Finally, advanced LLMs refine each pair by adjusting the MAS to be more query-specific and generating explanatory reasoning, yielding 11,442 training samples.